Imagine that you are a farmer in a developing country and you are asked to participate in a survey. One of the questions is: “How much fertilizer did you apply to your crops last year?” Would you be able to accurately remember the precise number?

Researchers often use such self-reported retrospective survey questions in empirical work. However, it remains unclear whether survey participants can answer such questions reliably, or whether their answers are subject to cognitive biases even over relatively short recall periods. In a new study published in the American Journal of Agricultural Economics, we examine the role of one particular bias, mental anchoring, in recalled objective and subjective indicators among smallholder farmers in four Central American countries.

What did the study do?

The term “mental anchoring” refers to the tendency to rely too heavily on a single piece of information (the “anchor”) when making a decision. Our study, “Anchoring bias in recall data: Evidence from Central America,” uses differences between recalled and concurrent responses to quantify a respondent’s degree of mental anchoring. It assesses whether survey participants use their answers for the most recent period as a cognitive heuristic (a mental shortcut that guides decision-making) when recalling information from a previous period. For instance, we evaluated whether respondents in the 2013 survey used their reported 2013 income as an anchor when they subsequently recalled their 2012 income, while controlling for the income they had actually reported in the 2012 survey.
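A stylized way to write the comparison just described is as a linear regression of the recalled value on both concurrent reports. This is a sketch under our own assumptions: the symbols are introduced here for illustration, and the paper’s exact specification (including any additional controls) may differ. Here $R_{i,2012}$ is respondent $i$’s 2012 value as recalled in 2013, $C_{i,2013}$ is the 2013 concurrent report (the anchor), and $C_{i,2012}$ is the value the same respondent reported in the 2012 survey.

```latex
R_{i,2012} = \alpha + \beta \, C_{i,2013} + \gamma \, C_{i,2012} + \varepsilon_i
```

If recall were accurate, one would expect $\gamma$ to be close to 1 and $\beta$ close to 0; a large, significant $\beta$ alongside a small $\gamma$ is the signature of anchoring on the most recent report.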

Mental anchoring is substantive

We found strong evidence of recall bias for both objective measures (income, wages, and working hours) and subjective indicators (self-reported happiness, health, stress, and well-being).

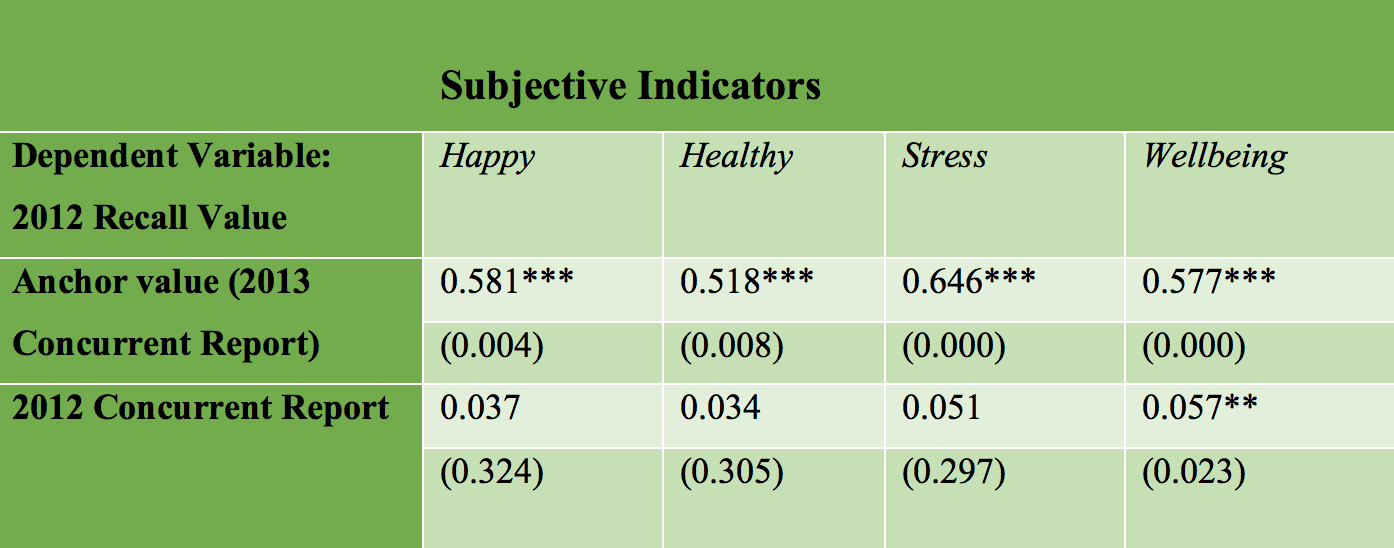

Note: The table reports partial correlations between the 2012 recall value (value for 2012 reported in 2013) and each of two concurrent reports: the 2013 concurrent report (value for 2013 reported in 2013) and the 2012 concurrent report (value for 2012 reported in 2012). p-values are reported in parentheses. *** denotes significance at the 1% level.

As shown in the extract from Table 3, the coefficient on the anchor value (all else being equal) is large and statistically significant across indicators, ranging from 0.66 to 0.75. For example, when a respondent reported working one more hour per week in the 2013 concurrent report (2013 outcome reported in 2013), this was associated with an increase of 0.66 hours in their recalled weekly working hours for 2012 (2012 outcome recalled in 2013). Similarly, a $10 increase in the monthly income reported in the 2013 concurrent report was associated with a $7.50 increase in the recalled monthly income for 2012. In contrast, the coefficient on the 2012 concurrent report (2012 outcome reported in 2012) was statistically indistinguishable from zero. This casts doubt on the signaling value of the recall measure.
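The arithmetic behind these two examples is simply the anchor coefficient multiplied by the change in the 2013 report, holding the 2012 report fixed. A minimal Python sketch using only the two coefficients quoted above; the dictionary keys and the function name are hypothetical labels, not taken from the study:

```python
# Anchor coefficients quoted in the text (extract from Table 3).
# Keys are illustrative labels chosen for this sketch.
ANCHOR_COEF = {
    "weekly_hours": 0.66,
    "monthly_income_usd": 0.75,
}


def implied_recall_shift(indicator: str, anchor_change: float) -> float:
    """Predicted change in the recalled 2012 value for a given change in the
    2013 concurrent report, holding the 2012 concurrent report fixed."""
    return ANCHOR_COEF[indicator] * anchor_change


print(round(implied_recall_shift("weekly_hours", 1.0), 2))         # 0.66 hours
print(round(implied_recall_shift("monthly_income_usd", 10.0), 2))  # 7.5 dollars
```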

We also found a strong and statistically significant relationship between the anchor value and the recalled measures for subjective indicators (see extract from Table 4 below). For these indicators, the partial correlation between the anchor value and the recall value ranges from 0.52 to 0.65.

Note: The table reports partial correlations between the 2012 recall value (value for 2012 reported in 2013) and each of two concurrent reports: the 2013 concurrent report (value for 2013 reported in 2013) and the 2012 concurrent report (value for 2012 reported in 2012). p-values are reported in parentheses. ** and *** denote significance at the 5% and 1% levels, respectively.

Results are not driven by a specific group of respondents

The high degree of mental anchoring we observed does not seem to be driven by a specific group of respondents. We generally did not observe differences by location, crop type, or individual characteristics. The results also hold across many alternative model specifications.

Anchoring bias varies depending on recent experiences

We also examined whether positive or negative changes in outcomes over time played a role in participants’ mental anchoring. Positive changes were strongly associated with a positive bias in the recalled value, while negative changes were strongly associated with a negative bias; in other words, recall of the earlier period was pulled in the direction of the more recent outcome. This pattern further supports our interpretation of the observed measurement error as anchoring bias. In addition, the recall bias for objective indicators was generally larger following negative changes, whereas the bias for subjective indicators was larger following positive changes.

What does this mean?

The weak relationship between outcomes measured concurrently during the reference period and their recalled equivalents calls into question the accuracy of retrospective data. The 2013 concurrent report was a stronger predictor of the 2012 recall value, in both magnitude and statistical precision, than the 2012 concurrent report, whose predictive power was often indistinguishable from zero. This undermines the signaling value of the recall measure.

Our results suggest that studies relying only on retrospective data to analyze the evolution of objective and subjective outcomes could overstate the autocorrelation of outcomes over time. For example, because objective indicators like income were more heavily biased following a negative change, such studies could substantially overstate negative outcomes in previous periods if the survey year happens to be a bad one. In other words, researchers could obtain very different results from retrospective data depending on whether the data are collected in a particularly good or bad year.
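To see how anchoring can inflate apparent autocorrelation, consider a small simulation built entirely on hypothetical assumptions rather than estimates from the study: true outcomes with an autocorrelation of 0.3, reporting noise with standard deviation 0.5, and a recall rule that puts a weight of 0.7 on the 2013 report (within the range of anchor coefficients reported above) and a small, assumed weight of 0.1 on the original 2012 report. The sketch compares the correlation a panel of concurrent reports would show with the one a retrospective survey would show.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def simulate(n=5000, rho=0.3, beta=0.7, gamma=0.1, noise_sd=0.5, seed=1):
    """Return (panel correlation, retrospective correlation).

    All parameters are hypothetical: rho is the true autocorrelation,
    beta the weight on the 2013 anchor, gamma the weight on the 2012 report.
    """
    rng = random.Random(seed)
    c2012, c2013, r2012 = [], [], []
    for _ in range(n):
        y12 = rng.gauss(0.0, 1.0)  # true 2012 outcome
        y13 = rho * y12 + math.sqrt(1 - rho ** 2) * rng.gauss(0.0, 1.0)  # true 2013 outcome
        a = y12 + rng.gauss(0.0, noise_sd)  # 2012 concurrent report
        b = y13 + rng.gauss(0.0, noise_sd)  # 2013 concurrent report (the anchor)
        r = beta * b + gamma * a + rng.gauss(0.0, noise_sd)  # 2012 value recalled in 2013
        c2012.append(a)
        c2013.append(b)
        r2012.append(r)
    # Panel data pair the two concurrent reports; retrospective data pair the recall with 2013.
    return pearson(c2012, c2013), pearson(r2012, c2013)


panel_corr, retro_corr = simulate()
print(f"panel: {panel_corr:.2f}  retrospective: {retro_corr:.2f}")
```

Under these assumed settings, the population correlations work out to roughly 0.24 for the panel comparison and about 0.85 for the retrospective one, so the recall-based series looks far more persistent than the underlying outcomes.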

Future work

Understanding the accuracy and reliability of participants’ survey responses is critical, especially when those responses are used to inform policy interventions. We suggest that future research continue to examine the potential biases affecting self-reported retrospective data, as well as alternative approaches that could improve retrospective data collection. For example, it would be useful to assess whether the most recent outcome remains as strong an anchor when the question order is randomized, that is, when respondents are asked to report an outcome for the previous period before reporting it for the most recent period. Using an experimental setting to compare the biases of individuals who have experienced positive versus negative changes in outcomes over time would also be a promising avenue for further analysis.

Susan Godlonton is an Assistant Professor of Economics at Williams College and an Associate Research Fellow with IFPRI’s Markets, Trade, and Institutions Division (MTID); Manuel Hernandez is a Research Fellow and Mike Murphy is a Research Analyst with MTID.